Review - Burge, Tyler - The Origins of Objectivity

The topic of mental representation is philosophically problematic [10]. In this research project, a foundational tenet is that the brain is a computer, not just something that resembles one. We therefore look to undergraduate computer science (CS101) for a sensible definition of representation. From CS101, we learn that computer software consists of a static part and a dynamic part [1]. The static part of software is called 'data structures' (problem 'state' repositories), while the dynamic part is called 'algorithms' (state transition plans or specifications). This division has nothing to do with computers as such; it existed long before them. Indeed, it can be better observed in a simple clock (ie a clockwork-operated chronometer), like the one with alarm bells on top that used to wake everyone up on a workday morning, using the following *techno-equivalence* heuristic: the 'plastic' bits that change shape or undergo relative motion, typically the hour and seconds hands, are the alarm clock's techno-equivalent of the computer's 'software', while all the other parts of the clock are its techno-equivalent of the computer's hardware. The clock's frame, its 'hardware', if you will, has two main roles: (a) to provide a rigid mechanical framework, and (b) to store and mete out the mainspring's potential (strain) energy, which powers the motion of the hands around the clock's graduated circular dial, its 'face', if you will.
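The static/dynamic split can be sketched in a few lines of Python. This is a minimal illustration only, not something taken from Burge or from GT: the names `ClockState` and `tick` are hypothetical, and the clock is reduced to three hands on a 12-hour dial.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClockState:
    """Static part: the data structure holding the positions of the hands."""
    hours: int = 0
    minutes: int = 0
    seconds: int = 0


def tick(state: ClockState) -> ClockState:
    """Dynamic part: the algorithm that maps one state to the next."""
    total = (state.hours * 3600 + state.minutes * 60 + state.seconds + 1) % (12 * 3600)
    return ClockState(total // 3600, (total % 3600) // 60, total % 60)


state = ClockState(hours=11, minutes=59, seconds=59)
state = tick(state)  # the hands roll over to 0:00:00 on a 12-hour dial
```

The data structure says nothing about how time advances, and the algorithm stores nothing between calls; each needs the other to make a working clock.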

In a mechanical device such as a clock, functionality is achieved by means of physical characteristics, such as manufactured shape and the strength of the materials used. In a computer, functionality is achieved by treating information [11] itself as a construction material capable of being moulded and carved as required. It is the shape-shifting ability of the data structures, as defined by the authors of the pre-compiled source code (the 'program'), which governs their fitness for purpose.

Burge's issue with currently held ideas about mental representation seems to revolve around the differences between sensation (eg of an object that is the source of a stimulus) and perception. He seems to propose that perception is not just a higher order of sensation (defined as a 'bottom-up' or externally referenced information input), but that it necessarily involves an equal top-down contribution from the brain's internal resources, typically autobiographical memory and semantic knowledge. That this is true at higher, patently conscious, levels is rather obvious. Experiments (eg those using backward masking and shadowing techniques) clearly reveal how what the individual subject knows changes what they report having seen. However, Burge claims that this is just as true of percepts which are generated by functional circuits operating at anatomically lower (sub-cortical) levels. This class of percepts is shared by members of the same species; they are species-derived, not individually acquired, semantic properties.

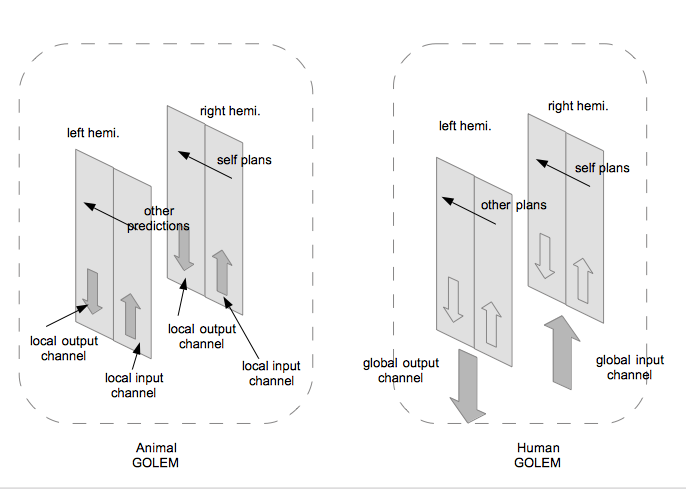

In GOLEM Theory (GT), this proposal is easy to accommodate, due to GT's cybernetic roots. In cybernetics, no measurement, derived from sensory or other inputs, is permitted to exist within the cognitive realm without also having its homeostatic setpoint value as its constant point of global reference. This is a similar notion to the need for all engineering numbers to be expressed in compatible units of physical magnitude (ie have SI dimensions). To everyone except the small worldwide community of cybernetic systems theorists, and the even smaller cohort of cybernetic system practitioners, such an apparently 'left-field' idea seems quite unnecessary. For starters, it represents a doubling of the workload incurred by sensory computations. Also, it does not coexist happily with current mathematical modelling, which is an axiomatic (ie cybernetically feedforward) source of semantically descriptive data. Arguably, this is a consequence of the historical estrangement between mainstream mathematics and 'vanilla' cybernetics. Yet GT claims that this change to the scientific paradigm is needed to avoid continually encountering the kinds of subtle errors and problematic issues described by Burge in his monograph.
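The pairing of each measurement with its setpoint can be sketched as a small data structure. This is a hedged illustration only: `Referenced`, `error` and the temperature figures are hypothetical names and values chosen for the example, not part of any published GT specification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Referenced:
    """A measurement that always travels with its setpoint (assumed representation)."""
    value: float     # what the sensor reports
    setpoint: float  # the homeostatic reference it is judged against

    @property
    def error(self) -> float:
        """Signed deviation from the setpoint, the quantity a regulator acts on."""
        return self.value - self.setpoint


core_temp = Referenced(value=38.5, setpoint=37.0)  # illustrative numbers, degrees Celsius
deviation = core_temp.error
```

Every reading now carries two numbers rather than one, which is the doubling of workload noted above; in return, the deviation is available wherever the reading goes, without a separate lookup.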

In the preface, Burge expresses one of his main aims thus: to explain the extreme primitiveness of conditions necessary and sufficient for this elementary type of representation [called] perception. He concludes that non-perceptual sensory states are not instances of representation. GT takes this even further. Since bio-input (sensory) sub-system states cannot exist at higher levels without their inherited datum/s (ie set of matching cybernetic setpoint values), the only type of non-perceptual sensory state is one whose magnitude is insufficient to exceed the perceptual threshold derived from the current setpoint value.

Unlike Burge, I did not major in Philosophy. I started out in the more humble discipline of Engineering, where we are taught that the only sure way to understand a poorly written description of a complex system is to draw a diagram, then laboriously label all the parts. It doesn't have to be beautifully drawn or meticulously spell-checked [3]; it can be a mud map crudely drawn by hand, just so long as it depicts the level of detail needed to resolve ambiguous terminology, or to highlight contradictory propositions within the journal article's main arguments.

In the figure below, all sensory inputs which impinge on a small patch of skin are processed by the dendrites of the cutaneous neuroreceptor (CNR) located at or near the centre of the patch. In cybernetic terms, the setpoint value or system threshold is equivalent to the CNR's membrane resting potential. Unless the sum total of ALL synaptic inputs equals or exceeds the threshold, the neuron doesn't fire, and the strictly local sensory signal never gets the chance to merge with those from other local axons at the next level up. The further up the sensory hierarchy the signals travel, the larger the number of near neighbour (contextual) neurons available for semantic evaluation. It is precisely this characteristic aggregation of content-in-context semantic 'grounding' that creates every perceptually meaningful event, eg serial processing of the linguistic symbols which constitute memory contents, or parallel processing of the sensory signals produced by self-movement. Without context, sensory content has no meaning.
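The threshold rule in the paragraph above can be sketched as follows. This is a deliberately crude illustration under stated assumptions: the function names `fires` and `next_level` are hypothetical, the input values are in arbitrary units, and a real neuron integrates its inputs over time and space rather than taking a plain sum.

```python
def fires(synaptic_inputs: list[float], threshold: float) -> bool:
    """Return True when the summed input equals or exceeds the threshold."""
    return sum(synaptic_inputs) >= threshold


def next_level(local_outputs: list[bool]) -> int:
    """Count how many local signals survive to be merged at the next level up."""
    return sum(local_outputs)


patch_a = fires([0.2, 0.3, 0.6], threshold=1.0)  # summed input is above threshold
patch_b = fires([0.1, 0.2], threshold=1.0)       # summed input is below threshold
merged = next_level([patch_a, patch_b])          # only patch_a contributes
```

A sub-threshold patch contributes nothing upstream, so on this reading it never acquires the context that would make it a percept.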

In conclusion, Burge's identification of a current problem in the theory of cognition, and his proposal of a solution, are admirable; but to attempt both without also understanding the revolutionary influence of neocybernetics on this research field is scientifically ill-advised.

His real agenda is revealed later in his seminal tract: he is a neobehaviourist [10]. The original behaviourists claimed that to even talk of mind (the internal aspect of the brain) is unscientific because it has no measurable features. The neobehaviourists, ie those who came after (and are thus fully aware of) the so-called cognitive revolution, will tolerate discussions of mind, but not of consciousness. Instead, Burge invents a theoretical tension between perception proper and mere sensation. The kicker is that he identifies perception as a global property of mental agency, which is as valid a definition of consciousness as ever was. Here is his real business: avoiding the career-limiting aspects of mentioning the C word in professional publications, while taking full advantage of its epistemological power. The challenge in academic work is (indeed, always has been) to combine high intelligence (needed to reveal the secrets of nature) with great courage (needed to confront the hypocrisy of society). Burge has the former but not the latter.

According to Staddon [11], the prime function of behavioural observations is not to find all possible connections between stimulus and response, but to identify hidden mental states, especially computational ones.

1. FYI Engineering Structures Analysis also shares this feature, consisting of 'Statics' and 'Dynamics'. This classification scheme is 'fractal', in that Dynamics is further subdivided into Kinetics (forces) and Kinematics (geometry). My first degree was a Bachelor of Mechanical Engineering. Sometimes I use physical analogies from that domain to explain a feature of our cognitive or neural functionality.

2. Some say that these two-component (dyadic?) data vectors should even be accorded the status of a new type of hybrid [2] number system. In an earlier publication, I tentatively proposed that they should be called T-values, where T connotes the contribution of orthomorphic (ie regulatory) thresholds (cybernetic 'setpoints') to bio-plausible system specification.

3. In spite of the less than ideal 'optics', no one really cares if there are spelling typos which don't interfere with meaning. That's what computers are for, isn't it?

10. If one had to summarise the thematic (cf. chronological and historical) difference between behaviourists and neobehaviourists, it is that neobehaviourists believe that repetition and reinforcement are not essential for learning.

11. Staddon, J. (2021) The New Behaviorism: Foundations of behavioral science, 3rd Edition (Psychology Press)

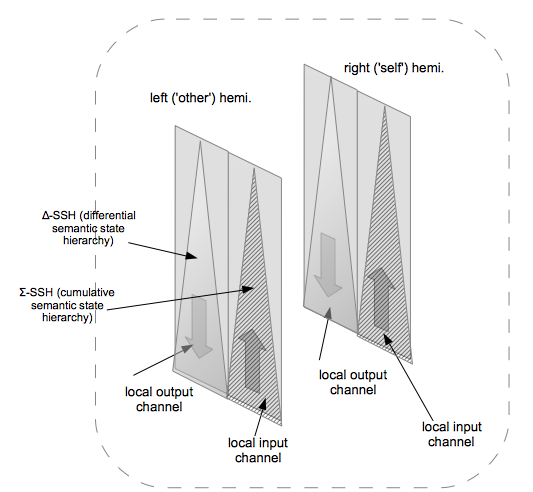

The term 'linguistic' covers syntax and semantics. In this section, the term 'syntax' is found to be of historical use only, and has been replaced with delta- or differential semantics. The term 'semantics' plays a more important role, covering some of the ground lost to 'syntax'. Semantics proper is replaced with sigma- or integral semantics. Sigma-semantics describes the cumulative (adaptational) property of the organism's current hierarchical state. Delta-semantics describes the discriminative (situational) property of the organism's current behavioural capacity.
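One way to read the sigma/delta pairing is as the ordinary relationship between a running total and its successive differences. The sketch below assumes that reading, which is an interpretation of 'integral' and 'differential' rather than a definition given in the text; the function names and the integer 'states' are hypothetical stand-ins.

```python
from itertools import accumulate


def sigma(deltas: list[int], initial: int = 0) -> list[int]:
    """Integral view: the running total of successive changes."""
    return list(accumulate(deltas, initial=initial))


def delta(states: list[int]) -> list[int]:
    """Differential view: the change between consecutive states."""
    return [b - a for a, b in zip(states, states[1:])]


changes = [2, -1, 3]
states = sigma(changes)    # cumulative state after each change
recovered = delta(states)  # differencing the states gives back the changes
```

On this reading each view can be rebuilt from the other, given the starting state, which is why the two can be treated as complementary descriptions of one process.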

By introducing the term delta-semantics, we avoid confusion around whether the linguistic construct which was called 'syntax' is used internally (i-syntax, as in behaviour planning of self, behavioural prediction of others, behavioural prototyping or thought in general) or externally, where there is audible or gestural speech, or legible writing [1].

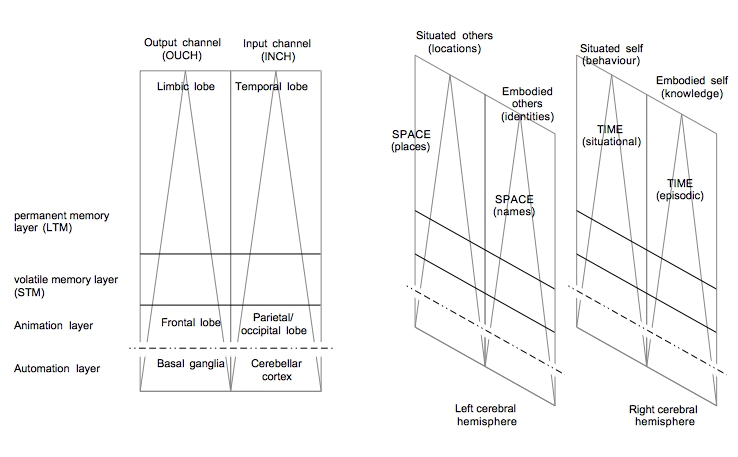

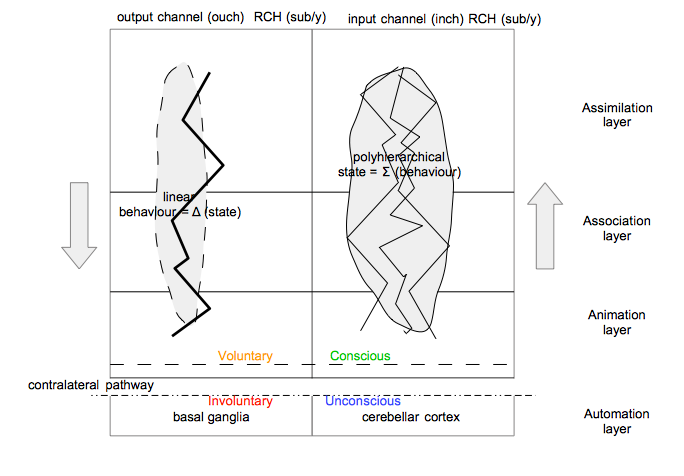

The automation layer consists of the basal ganglia on the emotional, output-channel side of the GOLEM, and the cerebellar cortex on its perceptual, input-channel side. This layer supports a series of Mealy machines. Collectively, these asynchronous Mealy machine Me-ROMs combine to form a synchronous Moore machine reflecting the current choice of emotional vector and perceptual frame in the animation layer. It is the brain's ability to forge a synchronous whole from the sum of asynchronous parts which allows it to solve the 'hard problem' of consciousness [2].
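For readers unfamiliar with the two machine types, the textbook difference is that a Mealy machine's output depends on the current state and the current input, while a Moore machine's output depends on the current state alone. The sketch below illustrates only that distinction, using a toy parity tracker; the function names are hypothetical, and nothing here models the anatomy, the Me-ROM/Mo-ROM images, or the synchronous/asynchronous claim.

```python
def run_mealy(transition, output, start, inputs):
    """Mealy machine: each output depends on the current state AND the input."""
    state, outputs = start, []
    for symbol in inputs:
        outputs.append(output(state, symbol))
        state = transition(state, symbol)
    return outputs


def run_moore(transition, output, start, inputs):
    """Moore machine: each output depends on the current state alone."""
    state, outputs = start, [output(start)]
    for symbol in inputs:
        state = transition(state, symbol)
        outputs.append(output(state))
    return outputs


def flip(state, bit):
    """Toy transition: the state is the parity of the 1s seen so far."""
    return state ^ bit


bits = [1, 0, 1, 1]
mealy_out = run_mealy(flip, lambda s, b: s ^ b, 0, bits)  # one output per input
moore_out = run_moore(flip, lambda s: s, 0, bits)         # one output per state visited
```

The Moore run emits one more value than the Mealy run because it reports its starting state before any input arrives.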

Current and previous automation layers (ie Moore machine Mo-ROM images) are buffered during each day, because useful learning may occur at any waking time. This buffer, stored as a stack of temporal (time-based) emotional vectors, forms the short-term memory (STM). By means of sleep-based memory consolidation, salient Mo-ROM records are archived as autobiographical and semantic knowledge [3], forming the individual's long-term memory (LTM).
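The buffer-then-archive cycle described above can be sketched as a small class. This is an illustration under assumptions: the class `Memory`, its method names, the numeric salience score and the cut-off value are all hypothetical, since the text says only that salient records are archived during sleep.

```python
from dataclasses import dataclass, field


@dataclass
class Memory:
    stm: list = field(default_factory=list)  # daytime stack of (record, salience) pairs
    ltm: list = field(default_factory=list)  # archived records

    def buffer(self, record: str, salience: float) -> None:
        """Push a snapshot onto the short-term stack during waking hours."""
        self.stm.append((record, salience))

    def consolidate(self, threshold: float) -> None:
        """Sleep phase: archive sufficiently salient records, then clear the buffer."""
        self.ltm.extend(rec for rec, sal in self.stm if sal >= threshold)
        self.stm.clear()


memory = Memory()
memory.buffer("commute", 0.1)
memory.buffer("near miss at crossing", 0.9)
memory.consolidate(threshold=0.5)  # assumed salience cut-off
```

After consolidation the short-term stack is empty and only the high-salience record has been carried into long-term storage.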

In the figure above, all the elements for a complete GOLEM are shown in their latest assumed-correct anatomical juxtaposition [4]. Note the interesting consequences of the subjective (properly: intra-subjective) and objective (properly: extra-subjective, or, perhaps equivalently, inter-subjective) phenomenality classes. The right cerebral hemisphere (RCH) contains the representational data structures (RDSs) of the embodied self. There can only be one embodied self, otherwise an etymologically schizophrenic (vs clinically schizoid) situation would exist, leading to either a frozen state of indecision or a goal-less action zombie. This leads to the customary assumption that the RCH leads with the original 'self' and the left cerebral hemisphere (LCH) follows with the other 'selves'. When there is exclusively ONE self, and we must avoid ambiguous copies, multiple copies of it can only entail a historical record of one's own developmental stages, located in the RCH. This leads to the existence of an exclusively TIME-based memory area. However, when there can be MULTIPLE other selves, and we must avoid ambiguous copies, multiple copies can only entail a geographical record, located in the LCH, of the other selves whom the subjective self has known personally or thematically/epistemologically (eg we all know who Santa is, though none of us has actually met him, nor ever expects to). This leads to the existence of an exclusively SPACE-based memory area.

There are two cerebral hemispheres, which are almost identical. However, for subjectivity to work, one hemisphere must predominate. This is almost always the RCH. When we are infants, we develop our own sense of self by translating between what situations feel like (using our RCH) and what they look like (using our LCH). For this to work in social (multi-self) contexts, our minds must have evolved by adopting the 'minimal default assumption', ie that others have very similar internal functions to our own. Consequently, being able to view ourselves objectively, via the RCH --> LCH transformation, allows us to model the external views of others, and therefore (by extension) to empathize with them, ie to model what they must be feeling. Symbolically, we propose that (RCH --> LCH) self =>> (LCH --> RCH) other.

That is, for a given embodied state of self E(0) in the RCH, we can recognise the matching situated state(s) [5] of self S(0) in the LCH. But because we all closely resemble each other, architectonically speaking (ie on a species level), it is also possible to go the other way, ie to match the situated state of the proximate other S(1) to their embodied state E(1). This is the basis of 'situational empathy', the involuntary like-mindedness of people who face similar predicaments (situational challenges).

Behaviour and Situation - the coathanger vs the clothes

Consider the figure immediately above. Also consider the following analogy: the personality (character traits without memory) is the coathanger and the behaviour is the clothes. Averaged over the species, we can speak of a standard (cf. normal distribution) personality, so that, once we adopt this statistical 'kludge', behaviour changes only to suit the situation. Therefore we can posit that mental state (= knowledge + memory) is an accumulation of behaviour plans (knowledge) plus situation plots (memories). Once you know the situation/stimulus, you can then predict the behaviour/response [6], on average.

This is important because later, when we claim that language is an externalised form of behaviour planning, we will also claim that syntax and semantics have inherited their differential::cumulative relationship from internal mental models of behaviour and state, as per the figure above.

1. It may even be a trivial task for a skilled, well-resourced software manufacturer like Google's 'skunkworks'. Perhaps the clandestine 'dirty tricks' spyworks of the US or the People's Republic of China have already done it.

2. As popularised by the Australian philosopher, and my fellow Flinders University alumnus, Professor David Chalmers.

3. A distinction introduced by Endel Tulving, the Canadian cognitive psychologist.

4. Hi! almost there. There is enough detail in this latest figure for a clever person to build clones of these monsters and conquer the world with a clone army.

5. We must admit to the possibility of multiple situated states for each embodied state, one for each person we know.

6. We humbly request the reader's indulgence that we be permitted to drop into behaviourist parlance occasionally, should the circumstance allow it.